I have been fascinated by virtual reality (VR) for a long time. I have watched it evolve, becoming more sophisticated every year and enabling users to navigate increasingly complex worlds through increasingly natural user interfaces.

For several years, I have wanted to get a nice set of VR equipment for our research lab. We had a few ideas we wanted to test, and VR offered the perfect setup for that. The difficulty has always been cost: to get a nice system, for instance a cave with good tracking and interaction devices, we would have needed to spend several tens of thousands of dollars. We thought that was expensive, and we are not alone: I have occasionally heard users talking about VR and saying how much they would like to walk virtually through their models, for design reviews, for showing to clients, etc. But they also hit that cost obstacle, and most of the time decided not to incur the expense…

A few years ago, while attending a scientific conference in Louisiana, I had the chance to visit a research center that had a lot of fancy virtual reality equipment. The visit was truly amazing. One asset that caught my attention was a five-sided cave (I am not sure, but I think it could be converted to six sides). It was truly fantastic: I walked through a virtual model, the calibration was perfect, and the system responded very quickly to my position in the cave. Rendering quality was very good and well synchronized. It was the perfect cave. After trying it, I walked around briefly and saw the infrastructure supporting it: high-definition projectors with mirrors, polarization-preserving projection surfaces, and a rack of servers for rendering the scene. The cave was so big that the projectors for the top and bottom faces took up more than one floor of the building. I then asked one of the Ph.D. students in charge of running the demo how much such a system cost. He said: “With or without the servers?” I answered: “With.” He said: “Hmmm… approximately $6 million.” Do I need to tell you that, back at the office the following week, regardless of how much I loved that cave, I did not put in a $6M purchase request?

Because of the cost barrier, we have not done much work in VR during the past few years, apart from a very basic model visualization experiment running on an Ultra Mobile PC that used an orientation sensor, the same kind of semi-immersive, orientation-based visualization that is now commonly available on tablets. Since then, general interest in VR has faded a bit. We recently passed the point where the number of web searches for Augmented Reality, which keeps increasing, overtook the number of web searches for Virtual Reality, which keeps decreasing. Maybe the cost of equipment has something to do with that. But in reality, VR is not disappearing: it is simply taking shape in different implementations under different names, computer games being one example (immersive, interactive, complex virtual worlds, etc.).

During the past year, new pieces of hardware have become available. The first one is the Oculus Rift, a head-mounted display equipped with an orientation sensor. At the moment, it is only available as a development kit: its resolution is good but not quite sufficient for good VR, and the shape and material of the device may need some improvement to comfortably fit a wider range of face shapes. It is just a commercial prototype, but considering its price (less than $400), it is quite amazing!

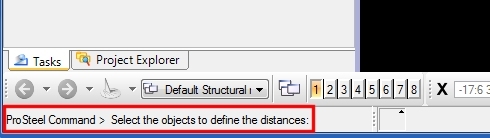

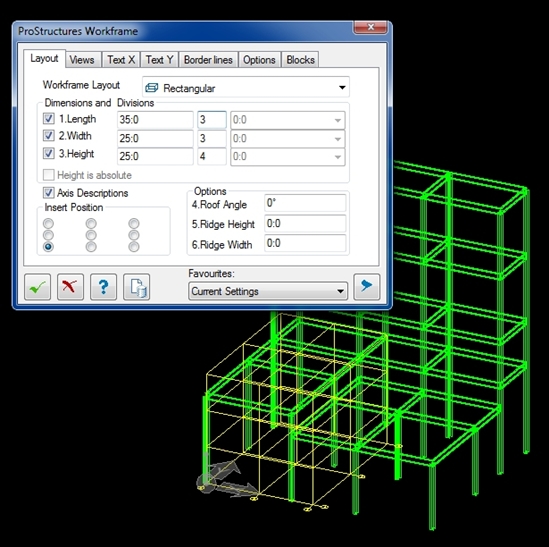

The second one is the Razer Hydra, a 3D game controller. For just a little over $100, you get a pair of handsets, each with buttons and a joystick, that are tracked in 3D (position and orientation) within a radius of about 2 meters. As with the Rift, its accuracy is nothing compared with true VR tracking systems, which can be accurate to the millimeter and work in much larger areas. But considering its low cost, it is a nice little device that can seriously be considered for low-cost VR experiences. These new pieces of hardware were enough to get us started…

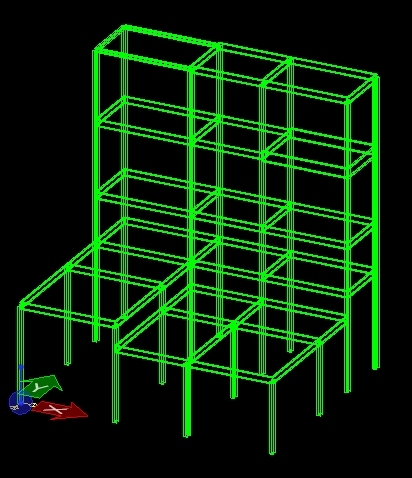

I always thought that VR would be cool to try on large infrastructure models: walking (or flying) through a model would help one get a better feel for it. But our recent work on data alignment and intelligibility prompted us to evaluate the potential of VR for something even greater: data understanding. Engineers use various kinds of data: 3D models, 2D drawings, spec sheets, site notes, live measurements, etc. That data is important for their work. Unfortunately, it is often used in isolation. We think that putting various sources of data together, and giving them a context, would bring more value and enable us to do more with the same data. Our recent work on augmented reality (Ref1, Ref2, Ref3, Ref4) seems to confirm that. After all, what is the value of a spec sheet if one does not know what device it refers to? What is the value of a 2D drawing out of the context of the 3D model that goes with it?

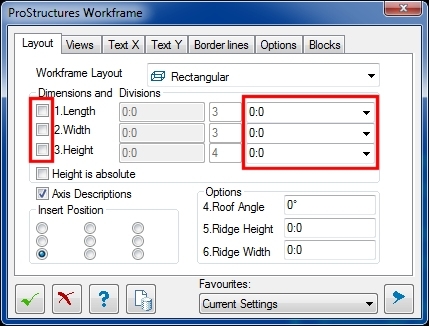

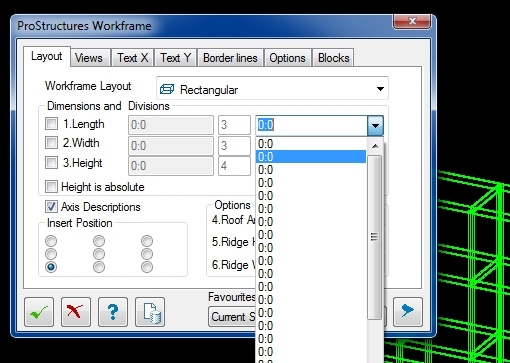

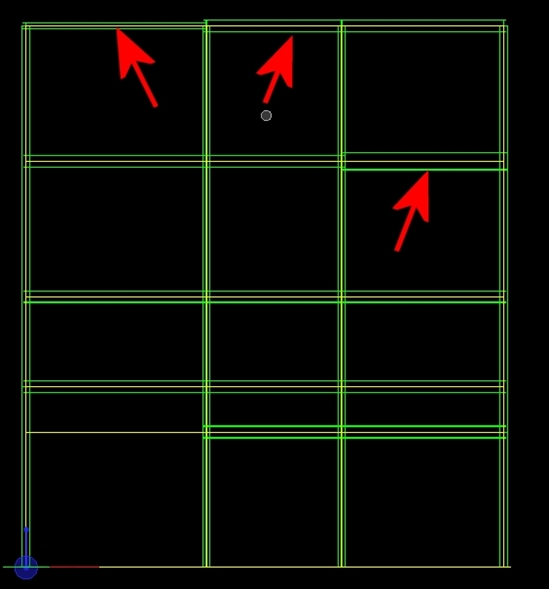

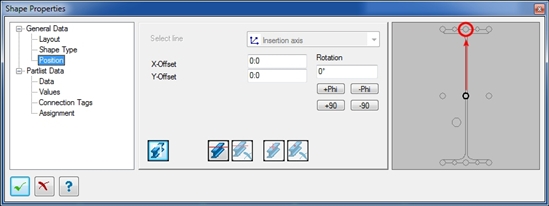

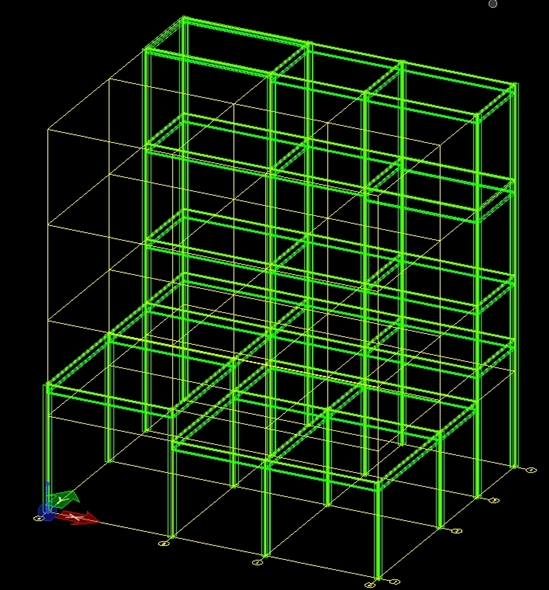

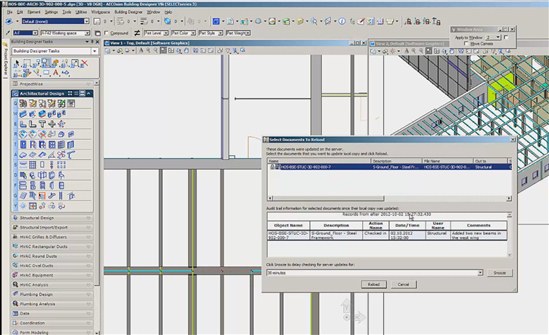

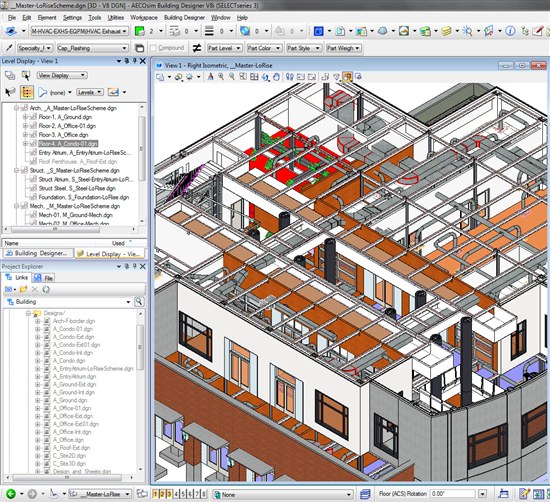

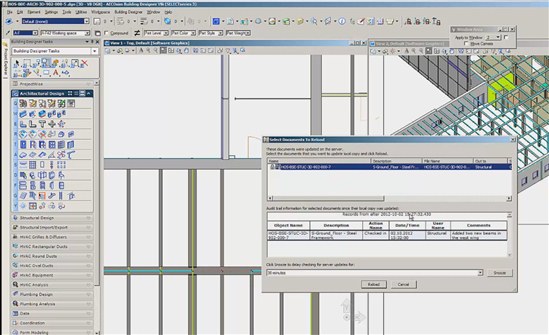

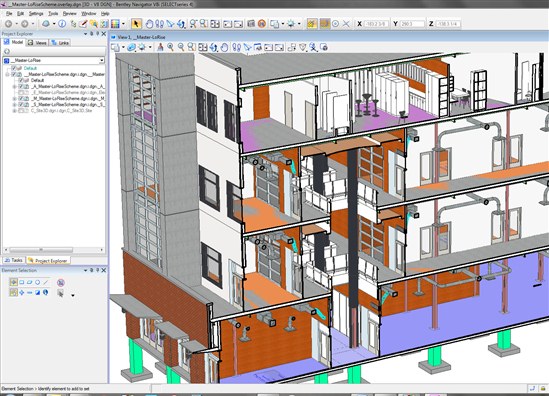

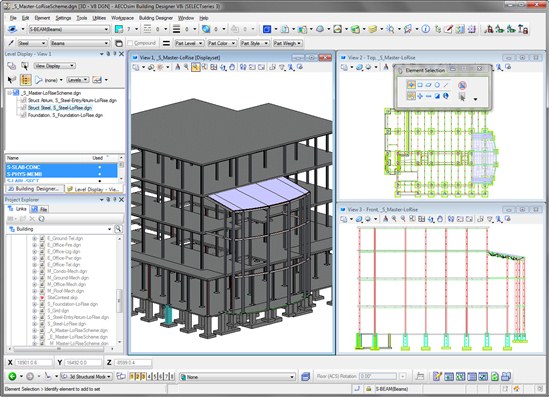

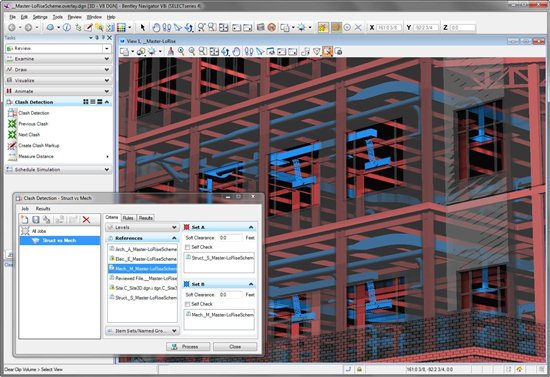

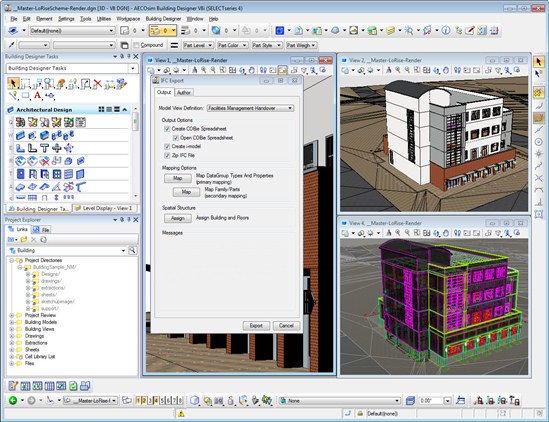

For our experiment, we used a dataset related to a building, composed of panoramic photos captured along a path inside the building, a point cloud of the interior, another (simulated) point cloud representing the structure inside the walls, a detailed 3D CAD model, and some metadata related to elements of the model. We then aligned all those datasets so that users switching from one dataset to another would not feel disoriented. Our goal was to create a virtual environment in which the user could navigate and look at the data from various positions and in various combinations. We hoped this would offer new ways of exploring the data, and perhaps open new perspectives for data visualization and intelligibility.
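Aligning the datasets amounts to expressing all of them in a single coordinate system. How the alignment was actually computed is not described here, so the following is only a minimal sketch, assuming each dataset's registration to the CAD model's frame has already been estimated and can be written as a rotation about the vertical axis plus a translation. The function names (`make_rigid_transform`, `compose`, `transform_point`, `to_common_frame`) and the numbers used are hypothetical.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = List[List[float]]


def make_rigid_transform(yaw_deg: float, translation: Sequence[float]) -> Matrix4:
    """4x4 homogeneous matrix: rotate about the vertical Z axis, then translate.

    Assumption: all datasets share the same "up" direction, so only a yaw
    angle and a translation separate their coordinate systems.
    """
    t = math.radians(yaw_deg)
    c, s = math.cos(t), math.sin(t)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a: Matrix4, b: Matrix4) -> Matrix4:
    """Matrix product a @ b: the resulting transform applies b first, then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(m: Matrix4, p: Point) -> Point:
    """Apply a homogeneous transform to a single 3D point."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


def to_common_frame(points: Iterable[Point], m: Matrix4) -> List[Point]:
    """Express every point of one dataset in the common (model) coordinate system."""
    return [transform_point(m, p) for p in points]


# Hypothetical registration of the laser scan to the CAD model's frame.
scan_to_model = make_rigid_transform(90.0, (10.0, 0.0, 0.0))
print(to_common_frame([(1.0, 0.0, 0.0)], scan_to_model))
```

Chaining transforms with `compose` would let a dataset registered to another one (say, the simulated in-wall point cloud registered to the scan) be brought into the model's frame too; once every dataset shares one frame, switching between them does not move the viewpoint.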

We used the Oculus Rift for visualization, and the Razer Hydra as a 3D device for interacting with the virtual world. The result is a VR experience, which of course cannot be reproduced here; the best alternative is to show you a video. It is nothing compared with wearing the HMD, but it should still give you an idea of what is possible.

(Please visit the site to view this video)

The first part of the video shows engineering data visualization using a natural interface: panoramic images, point cloud, and 3D model. The second part shows combinations of data: pipe elements hidden behind walls or seen through the point cloud, the laser scan acquired during construction seen through the model walls, or even engineering data related to elements. The possibilities are numerous. By displaying data in the context of other data, users can see potential relationships, such as the actual location of a stud (scanned during construction) with respect to a window frame, or the engineering data related to an actual ventilation duct that the user sees in the panoramic photo.

In conclusion, the results are positive. The system works, and navigation is easy and much more natural than with standard computer input (keyboard and mouse), which makes the system fun to use. Querying objects by clicking on them in a photo is much easier than finding an entry in a database through a text search. Data alignment and combined visualization enabled us to see correspondences that could not be seen otherwise.
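One plausible way to support clicking on an object in a panoramic photo, given that the photos are aligned with the model, is to turn the clicked pixel into a 3D ray from the capture position and intersect it with the model elements. The implementation used for the experiment is not described, so this is a minimal sketch under stated assumptions: the panoramas are equirectangular, their center column looks along the model's +X axis with Z up, and each element is approximated by an axis-aligned bounding box. All names, including the element ID `duct-17`, are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Box = Tuple[Vec3, Vec3]  # (min corner, max corner), in model coordinates


def pixel_to_direction(u: float, v: float, width: int, height: int) -> Vec3:
    """Map a pixel of an equirectangular panorama to a unit view direction.

    Assumption: column u spans longitude -pi..pi (center column = +X),
    row v spans latitude +pi/2 (top row) .. -pi/2 (bottom row), Z is up.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def ray_box_distance(origin: Vec3, direction: Vec3, box: Box) -> Optional[float]:
    """Slab test: distance along the ray to an axis-aligned box, or None if missed."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box[0], box[1]):
        if abs(d) < 1e-12:
            # Ray parallel to this slab: it misses unless the origin lies inside it.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def pick_object(origin: Vec3, u: float, v: float, width: int, height: int,
                objects: Dict[str, Box]) -> Optional[str]:
    """Return the ID of the nearest element hit by the clicked pixel's ray."""
    direction = pixel_to_direction(u, v, width, height)
    best_id, best_t = None, math.inf
    for obj_id, box in objects.items():
        t = ray_box_distance(origin, direction, box)
        if t is not None and t < best_t:
            best_id, best_t = obj_id, t
    return best_id


elements = {
    "duct-17": ((5.0, -1.0, 1.0), (6.0, 1.0, 2.0)),
    "wall": ((8.0, -5.0, 0.0), (8.2, 5.0, 3.0)),
}
camera = (0.0, 0.0, 1.5)  # hypothetical capture position of the panorama
print(pick_object(camera, 2048, 1024, 4096, 2048, elements))  # prints "duct-17"
```

The returned ID could then be used as a key into the element metadata, which is what makes a click on the photo equivalent to a database lookup. Bounding boxes keep the sketch short; testing against the actual model geometry would be more precise.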

Of course, this was just a preliminary study, aimed at verifying the potential of such an application. We could easily enhance the system by letting it query databases or display live data when the user clicks on an object, letting the user take measurements, or displaying pipe content and flow direction, spec sheets, or basically any source of data related to the asset. Note that since the system is VR, it can be used anywhere to view the data, for instance for training, work planning, or just data exploration. But it could also be used on site, at the location where the data was captured! With the data displayed on a tablet, for instance, a user could see the portion of the point cloud that corresponds to what he or she sees in reality, and query physical objects by clicking on their point cloud representation on the tablet screen. This would be similar to Augmented Reality, but would not require the fast and accurate tracking algorithms normally needed to overlay models onto the physical world.

We are intrigued by the idea that people assemble many types of spatial information together, and then use that space as a space for action. When we provide techniques within spatial information for people to say to each other, "look at this, and (please) do this.... and here’s what I saw, and this is what I did…" then spatial information becomes a place for action, where people think and communicate, through focus, clarity, instruction, direction, affirmation, and so on.

The Oculus Rift was developed with games in mind. It is interesting to note that the gaming industry, where a lot of VR work has found application, may actually save VR from sinking further and revive interest in it. The future will tell. For sure, this is only the beginning of a new era in engineering data visualization and exploration.

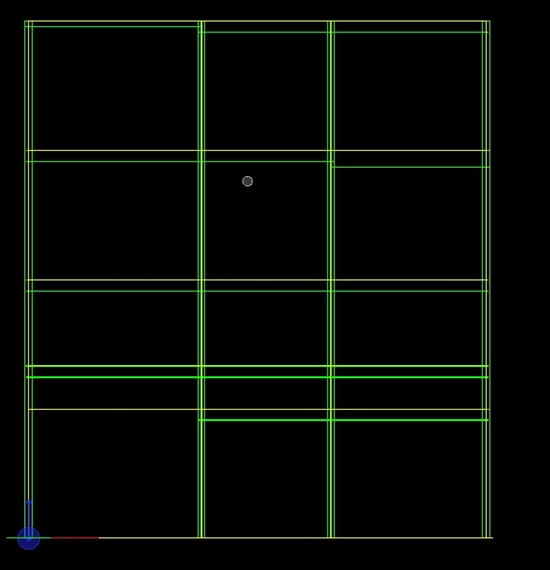

Many thanks to Chuck Fields, owner of the Paddy Wagon Irish Pub in Richmond, Kentucky, for giving us access to his building and permission to share our results!